Blog from Ed Hedley – Community of Practice 6

Terms of Reference for an Evaluation – Towards Better Practice

The sixth Community of Practice in the UK Evaluation Society’s pilot series on ‘Commissionership – towards better practice’ focused on the Terms of Reference for an Evaluation.

The discussion centred on the fundamental role a good quality terms of reference plays in launching a successful evaluation and, in particular, the difficult but necessary balance commissioners must strike between being specific whilst also leaving some room for flexibility.

In a lively session, the focus was on some of the key challenges commissioners and evaluators face in drafting and responding to terms of reference and on some of the potential ways we might be able to meet in the middle. The following issues were highlighted as those typically encountered in a terms of reference:

Context

Both commissioners and evaluators felt that the context section plays a fundamental role in helping to shape evaluators’ understanding of the intervention to be evaluated. As a section, it is sometimes given short shrift, which makes it more difficult for evaluators to develop a sensible and well-thought-out proposal.

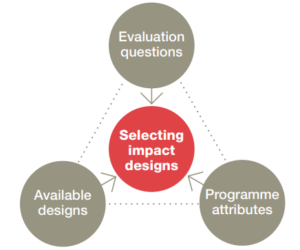

For evaluators, it is their understanding of the intervention context, alongside the questions being asked, that helps them make an informed selection of designs and methods. This is explicitly recognised in the Bond impact evaluation ‘design triangle’ (1), which also highlights that context should go beyond a generic description of the programme and its situation to include more specific information on ‘programme attributes’. This level of detail was echoed by participants, who highlighted that evaluators ideally look to the context section for such information as the history of the intervention, its structure and how it’s managed.

The Bond Design Triangle

(1) Bond 2022 (https://www.bond.org.uk/wp-content/uploads/2022/03/impact_evaluation_guide_0515.pdf)

Purpose of the evaluation

Evaluation purpose has been discussed previously during this series (in the second Community of Practice). This time, participants again highlighted the importance of ‘purpose’ in conveying the bigger picture to evaluators; in many respects it is the logical next step beyond context (‘why do we want to evaluate this intervention?’).

Evaluators highlighted the challenges that can arise if emphasis is placed on both accountability and learning; the difficulty is not that the focus is on one or the other per se, but that the overarching purpose loses clarity. Participants stressed that clarity of purpose is important: the design, management and messaging to both client and evaluand are very different for an accountability-focused evaluation than for a learning-focused one. This reflection was shared by commissioners, who recognised that it is all too easy to write a lot of different evaluation purposes into a ToR without thinking carefully about their implications.

Evaluation questions

Evaluation questions naturally flow from purpose. A key challenge discussed during the session was how to keep the list of questions to a manageable number. From the perspective of evaluators, a very long list can limit the depth and robustness with which each question can be explored; some questions also have very different resource implications from others (causal questions often require more resources than questions about relevance, for instance).

Commissioners have a key role to play in managing the expectations of stakeholders and in keeping the number and resource implications of evaluation questions in mind. Participants recognised that this can be easier said than done, however, especially where the list is influenced by senior stakeholders. In such cases, working through the list with evaluators and providing evaluators with some latitude to make changes may give commissioners additional credibility to manage the internal relationships.

Approaches and methods

The final section discussed in the session covered evaluation approaches and methods. Here, commissioners often try to give evaluators as much flexibility as possible; the phrase “we don’t want to prescribe the methods we’d like to see here” is commonly used in terms of reference. However, as participants noted, this is often followed by a “but…” or a “however…”. It is common for commissioners to push for innovative methods, for instance (previously discussed in session two). Both commissioners and evaluators agreed that a desire for innovative methods shouldn’t be the starting point; tried and true methods may be more appropriate to meet an evaluation’s purpose. And besides, evaluation users ultimately care more about clear and timely findings than about the specifics of the methodology used.

This is the last in the ‘Commissionership’ pilot series.

Thank you for all your feedback and constructive suggestions about where to take this further.

Watch this space!